Resources for You and Your Team

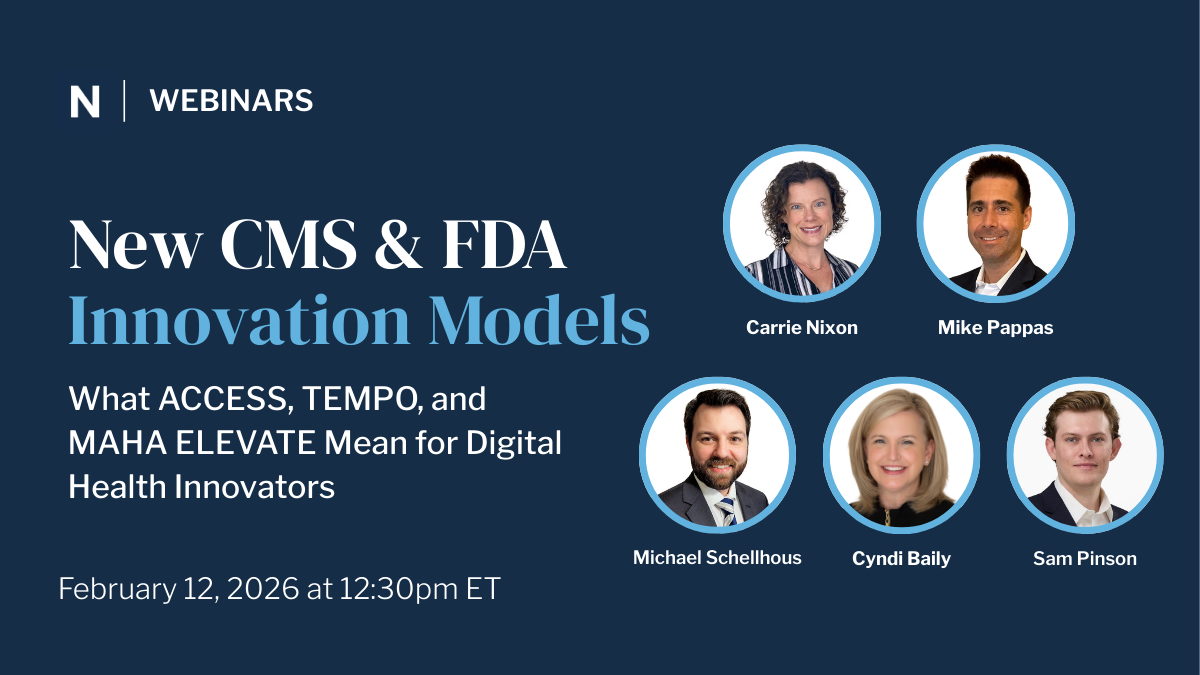

New CMS & FDA Innovation Models: What ACCESS, TEMPO, and MAHA ELEVATE Mean for Digital Health Innovators

CMS and FDA introduced ACCESS, TEMPO, and MAHA ELEVATE, three major innovation models that signal a shift in how federal agencies are thinking about digital health, care management, and reimbursement. In this webinar, Nixon Law Group’s attorneys break down what each model does, what types of entities can participate, and where we see concrete opportunities for digital health companies to engage.

Digital Mental Health Technology - The Quick Breakdown

In this rapid-fire breakdown, Nixon Law Group Senior Counsel Reema Taneja and Digital Health Expert Michael Schellhous cut through the noise to explain the critical shifts in Digital Mental Health Treatment (DMHT) codes.

They cover what the DMHT codes actually reimburse, how they fundamentally differ from Remote Physiologic Monitoring (RPM) and Remote Therapeutic Monitoring (RTM), and the strict FDA/CMS requirements your device must meet. They unpack the major hurdles, from the lack of a national payment amount (pricing is left to the Medicare Administrative Contractors, or MACs) to provider uptake challenges, and advise founders on how to position their companies for success despite these limitations.

This video is an essential resource for founders looking to understand the pathway to reimbursement and influence the future of digital mental healthcare policy.

2026 Medicare Final Rule Part 1: Rapid-Fire Q&A on RPM and RTM Codes

The 2026 Medicare Physician Fee Schedule (MPFS) Final Rule introduces some of the most consequential updates for Remote Physiologic Monitoring (RPM) and Remote Therapeutic Monitoring (RTM) since their inception. In Part 1 of our rapid-fire video series, health law experts Carrie Nixon and Reema Taneja break down the most significant changes impacting digital health innovators and care management companies.

2026 Medicare Final Rule Part 2: Rapid-Fire Q&A on Upstream Drivers of Health

The 2026 Medicare Physician Fee Schedule Final Rule confirms CMS’s strong push toward whole-person care, creating new reimbursement pathways for models focused on nutrition, physical activity, and social supports. In Part 2 of our series, Mike Pappas and Olivia Goldner analyze the updates to "Upstream Drivers of Health."

2026 Medicare Final Rule Part 3: Rapid-Fire Q&A on FQHC and RHC Bundled Code Unbundling

Rural Health Clinics (RHCs) and Federally Qualified Health Centers (FQHCs) face mandatory billing changes in the 2026 Medicare Physician Fee Schedule (MPFS) Final Rule. In Part 3 of our series, Stephanie Barnes and Sam Pinson break down the crucial compliance and billing updates that will affect virtual care and care management services in these settings.

MSO-PC Models: Digital Health Compliance and Scaling

Navigating the complex legal landscape of digital health requires a robust and compliant corporate structure. In this video, Nixon Law Group Senior Counsel Reema Taneja provides a comprehensive 101 on the Management Services Organization (MSO) – Professional Corporation (PC) model, focusing on the critical regulatory hurdles of the Corporate Practice of Medicine (CPOM) and the Anti-Kickback Statute (AKS).

Cracking the Code on AI Compliance: A Competitive Edge for Digital Health Innovators

Learn how to turn AI compliance into a strategic advantage. This webinar breaks down evolving regulations and what digital health innovators need to stay ahead.